MBI Videos

Jonathan Pillow

-

Jonathan Pillow

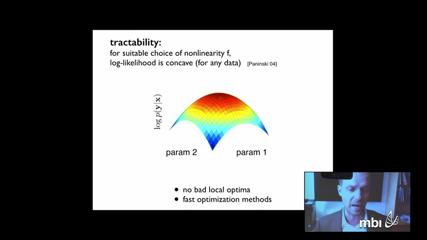

Barlow's "efficient coding hypothesis" asserts that neurons should maximize the information they convey about stimuli. This idea has provided a guiding theoretical framework for the study of coding in neural systems, and has sparked a great many studies of decorrelation and efficiency in early sensory areas. A more recent theory, the "Bayesian brain hypothesis", asserts that neural responses encode posterior distributions in order to support Bayesian inference.

However, these two theories have not yet been formally connected. In this talk, I will introduce a Bayesian theory of efficient coding that includes Barlow's framework as a special case. I will argue that there is nothing privileged about information-maximizing codes: they are ideal when one wishes to minimize posterior entropy, but they can be substantially suboptimal in other cases. Moreover, codes optimized for information transfer may differ strongly from codes optimized for other loss functions. Bayesian efficient coding substantially enlarges the family of normatively optimal codes and provides a general framework for understanding the principles of sensory encoding. I will derive Bayesian efficient codes for a few simple examples and show an application to neural data.
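The claim that information-maximizing codes can differ from codes optimized for other losses can be illustrated with a toy example. The sketch below is not the model from the talk. It assumes a noiseless K-level quantizer of a standard normal stimulus as a stand-in for a neural code, and compares two designs: equal-probability bins, which maximize mutual information (for a deterministic quantizer, I(X;Q) = H(Q)), and bins whose density is proportional to p(x)^(1/3), the standard high-resolution approximation to the quantizer that minimizes mean squared error. The helper names `edges_for` and `evaluate`, the exponent `alpha`, and K = 8 are illustrative choices.

```python
from math import inf, log2, sqrt
from statistics import NormalDist

# Assumed prior over the scalar stimulus x: a standard normal (illustrative choice).
PRIOR = NormalDist(0.0, 1.0)


def edges_for(num_levels, alpha):
    """Bin edges for a quantizer whose level density is proportional to p(x)**alpha.

    For a standard normal prior, p(x)**alpha is proportional to a normal density
    with standard deviation 1/sqrt(alpha), so the edges are that density's
    quantiles at k/num_levels. alpha = 1 gives equal-probability (infomax) bins.
    """
    compressor = NormalDist(0.0, 1.0 / sqrt(alpha))
    inner = [compressor.inv_cdf(k / num_levels) for k in range(1, num_levels)]
    return [-inf] + inner + [inf]


def evaluate(edges):
    """Return (bits, mse) for the quantizer defined by `edges`.

    bits: output entropy H(Q), equal to I(X; Q) for a deterministic quantizer.
    mse:  expected squared error of the posterior-mean decoder E[x | Q].
    """
    bits = 0.0
    explained = 0.0  # sum over bins of P(bin) * E[x | bin]^2
    for lo, hi in zip(edges[:-1], edges[1:]):
        p = PRIOR.cdf(hi) - PRIOR.cdf(lo)
        # Mean of a standard normal truncated to [lo, hi].
        mean = (PRIOR.pdf(lo) - PRIOR.pdf(hi)) / p
        bits -= p * log2(p)
        explained += p * mean * mean
    # Law of total variance with Var(x) = 1 and E[x] = 0:
    # E[Var(x | Q)] = 1 - Var(E[x | Q]) = 1 - sum_bins P(bin) * E[x | bin]^2.
    return bits, 1.0 - explained


if __name__ == "__main__":
    K = 8
    designs = [("infomax, alpha = 1", 1.0), ("MSE-oriented, alpha = 1/3", 1.0 / 3.0)]
    for label, alpha in designs:
        bits, mse = evaluate(edges_for(K, alpha))
        print(f"{label:28s} I(X;Q) = {bits:.3f} bits   MSE = {mse:.4f}")
```

With K = 8, the infomax design reaches the maximum of log2(8) = 3 bits but has the larger squared error, while the p(x)^(1/3) design transmits fewer bits yet reconstructs the stimulus more accurately: the "best" code depends on the loss being optimized.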
